Description of Log Service.

Computer programs record various events during their execution for later analysis. The ASP Log Service provides a facility for recording information about these events. Any application or ASP component in the cluster can use the Log Service to record such information.

The Log Service persists the information recorded by its clients so that it remains available for consumption later. A consumer may be offline, consuming the information at some later point in time, or online, consuming the information as soon as it is generated. The Log Service does not interpret the information recorded by its clients: it treats the information as an octet stream and attaches no semantic meaning to it.

All information related to one event is stored as one unit, known as a Log Record. Log Records are grouped according to a client-defined theme; such a group is called a Log Stream. A Log Stream can be shared by several components of one application or by different applications, and the application defines both the theme and the users of a Log Stream. Log Streams flow into Handlers. One such handler is the File Handler, which persists the Log Records into the Log File. Another is the Online Viewer, which displays Log Records as they are generated. The Log Service expects an archiving utility to siphon Log Records off from the Log File into another storage unit.

The Log Service provides interfaces for creating a Log Stream, opening an existing Log Stream, recording an event into an opened Log Stream, and closing a Log Stream. It supports three kinds of logging: Binary logging, TLV (Tag-Length-Value) logging and ASCII logging. The application chooses the logging type through the msgId parameter of the clLogWriteAsync() API. The Log Service also provides interfaces to change the properties of Log Streams and to set filters on a Log Stream, so that events are filtered on the generation side. Finally, it provides interfaces for registering interest in particular Log Streams and for receiving those streams.
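TLV logging is named above, but this section does not describe its wire format. Purely to illustrate the generic Tag-Length-Value idea, the following sketch encodes and decodes a single element, assuming a 2-byte tag followed by a 2-byte length, both big-endian, and then the value bytes. The layout and the names tlv_encode() and tlv_decode() are hypothetical; this is not the record format that the Log Service actually uses.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical layout: 2-byte tag, 2-byte length (both big-endian), value.
 * Returns the number of bytes written, or 0 if `out` is too small. */
size_t tlv_encode(uint16_t tag, const void *value, uint16_t len,
                  uint8_t *out, size_t cap)
{
    size_t needed = 4u + (size_t)len;
    if (out == NULL || cap < needed)
        return 0;
    out[0] = (uint8_t)(tag >> 8);
    out[1] = (uint8_t)(tag & 0xFFu);
    out[2] = (uint8_t)(len >> 8);
    out[3] = (uint8_t)(len & 0xFFu);
    if (len > 0)
        memcpy(out + 4, value, len);
    return needed;
}

/* Decodes one element; returns its total size, or 0 if `in` is truncated. */
size_t tlv_decode(const uint8_t *in, size_t avail,
                  uint16_t *tag, uint16_t *len, const uint8_t **value)
{
    assert(tag != NULL && len != NULL && value != NULL);
    if (in == NULL || avail < 4)
        return 0;
    uint16_t l = (uint16_t)((in[2] << 8) | in[3]);
    if (avail < 4u + (size_t)l)
        return 0;
    *tag = (uint16_t)((in[0] << 8) | in[1]);
    *len = l;
    *value = in + 4;
    return 4u + (size_t)l;
}
```

Because the length travels with the value, a reader can skip elements whose tag it does not recognise, which is the usual motivation for TLV over a fixed binary layout.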

Usage Model

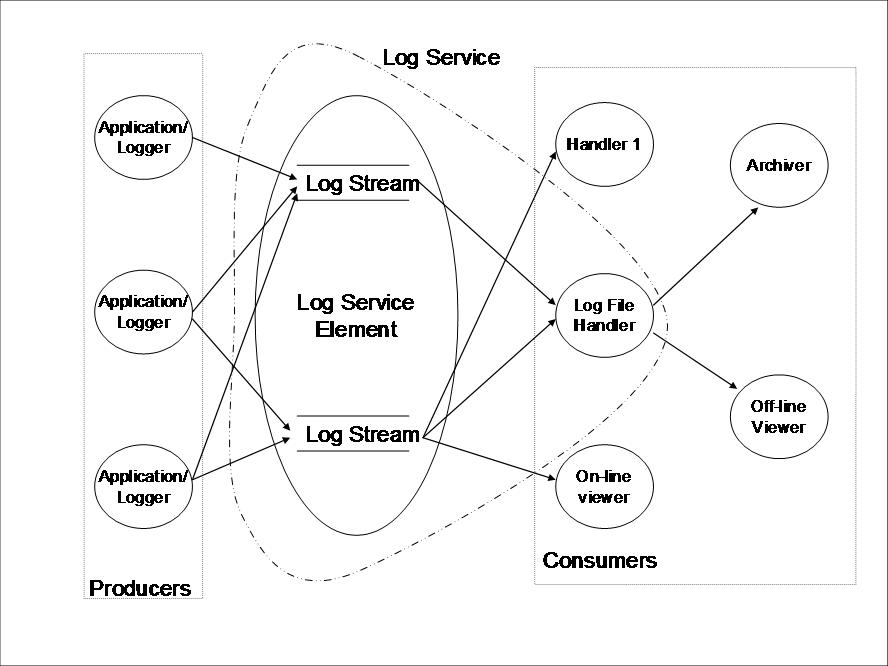

The Log Service follows a producer-consumer usage model. Loggers produce Log Records, and Log Stream Handlers consume them. Multiple Loggers may log into the same Log Stream, and the same record may be consumed by many Log Stream Handlers simultaneously. The model can also be thought of as publisher-subscriber, because Loggers and Log Stream Handlers are unaware of each other and each Log Stream Handler receives all the logged records. One Log Stream Handler is the Log File Handler, which persists the Log Records in the Log File. Other applications, such as the offline Log Viewer and the Archiver, work with the Log File Handler.
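The publisher-subscriber behaviour can be sketched in a few lines of C. This minimal model assumes a fixed-size handler table and uses hypothetical names (mock_stream, stream_subscribe(), stream_publish(), counting_handler()) rather than the ASP API: any logger publishes to a stream, and every subscribed handler receives every record, without publishers and subscribers knowing about each other.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_HANDLERS 8

/* A Log Stream Handler is modelled as a callback plus a context pointer. */
typedef void (*record_handler_fn)(void *ctx, const char *record);

typedef struct {
    record_handler_fn fn[MAX_HANDLERS];
    void *ctx[MAX_HANDLERS];
    size_t count;
} mock_stream;

/* Subscribes a handler; returns 0 on success, -1 if the table is full. */
int stream_subscribe(mock_stream *s, record_handler_fn fn, void *ctx)
{
    assert(s != NULL && fn != NULL);
    if (s->count == MAX_HANDLERS)
        return -1;
    s->fn[s->count] = fn;
    s->ctx[s->count] = ctx;
    s->count++;
    return 0;
}

/* Any logger may publish; every subscribed handler sees every record.
 * The publisher never learns which handlers exist, and vice versa. */
void stream_publish(const mock_stream *s, const char *record)
{
    for (size_t i = 0; i < s->count; i++)
        s->fn[i](s->ctx[i], record);
}

/* Example handler: counts the records it receives. */
void counting_handler(void *ctx, const char *record)
{
    (void)record;
    (*(int *)ctx)++;
}
```

With two counting handlers standing in for a Log File Handler and an online viewer, each would see both records published by two different loggers.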

Functionality

The basic purpose of the Log Service is to record information provided by a Logger for future use. This information is provided in the form of Log Records, which flow through a Log Stream and are persisted by the Log Service in a Log File.

The producer of Log Records is known as a Logger; it uses the clLogWriteAsync() or clLogVWriteAsync() API to write a Log Record into a Log Stream. Before using the Log Service, the Logger must initialize it by invoking clLogInitialize(), which returns a ClLogHandleT that identifies this initialization in subsequent operations on the Log Service. When the handle is no longer required, the association can be closed by invoking clLogFinalize(). After clLogInitialize(), the Logger gains access to a Log Stream by invoking clLogStreamOpen(), which returns a ClLogStreamHandleT that identifies the Log Stream in clLogWriteAsync() calls. When a Logger no longer requires a Log Stream, it can close the stream by invoking clLogStreamClose(). Any Log Client can change the filter of a Log Stream by invoking clLogFilterSet().
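The call order above (initialize, open a stream, write, optionally set a filter, close, finalize) can be illustrated with a self-contained mock. The names mock_initialize(), mock_stream_open() and so on are hypothetical and do not match the real ASP signatures, which take more parameters and return ClRcT codes; the mock also folds one client and one stream into a single struct and assumes a severity-bitmask filter purely for illustration.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical return codes for the mock; the real API returns ClRcT. */
typedef enum { MOCK_OK = 0, MOCK_ERR_STATE = -1 } mock_rc;

typedef struct {
    bool initialized;  /* set by mock_initialize(), cleared by mock_finalize() */
    bool stream_open;  /* set by mock_stream_open(), cleared by mock_stream_close() */
    unsigned filter;   /* assumed bitmask: bit s set => severity s passes */
    unsigned written;  /* records accepted by the stream */
    unsigned dropped;  /* records rejected by the filter at the producer */
} mock_client;

mock_rc mock_initialize(mock_client *c)
{
    if (c->initialized) return MOCK_ERR_STATE;
    *c = (mock_client){ .initialized = true, .filter = ~0u };  /* all pass */
    return MOCK_OK;
}

mock_rc mock_stream_open(mock_client *c)
{
    if (!c->initialized || c->stream_open) return MOCK_ERR_STATE;
    c->stream_open = true;
    return MOCK_OK;
}

mock_rc mock_filter_set(mock_client *c, unsigned mask)
{
    if (!c->stream_open) return MOCK_ERR_STATE;
    c->filter = mask;
    return MOCK_OK;
}

/* The filter is applied here, before the record would leave the producer. */
mock_rc mock_write(mock_client *c, unsigned severity)
{
    assert(severity < 32);
    if (!c->stream_open) return MOCK_ERR_STATE;
    if (c->filter & (1u << severity)) c->written++;
    else c->dropped++;
    return MOCK_OK;
}

mock_rc mock_stream_close(mock_client *c)
{
    if (!c->stream_open) return MOCK_ERR_STATE;
    c->stream_open = false;
    return MOCK_OK;
}

/* Ends the association; this mock also discards a stream left open. */
mock_rc mock_finalize(mock_client *c)
{
    if (!c->initialized) return MOCK_ERR_STATE;
    c->initialized = false;
    c->stream_open = false;
    return MOCK_OK;
}
```

Filtering before the write is what makes it a generation-side filter: a dropped record never costs any transport or storage.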

A Logger can also do ASCII logging with two macros, clAppLog() and clLog(). Both perform formatted logging, and both are limited to ASCII logging. ASP components use the clLog() macro to log their messages to the default sys stream. clLog() can log only into the sys stream, whereas clAppLog() can log into any stream.

An application that uses clLog() can call the macro immediately after calling clLogInitialize(). The same holds for clAppLog(), but only for the default streams defined in the configuration file, such as appstream and sysstream. To log into its own stream with clAppLog(), the application must first open that stream with clLogStreamOpen().
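The difference between default and application-defined streams can be sketched as follows. This hypothetical model pre-registers the default streams (named appstream and sysstream after the text) at initialization and requires an explicit open for any other stream; app_log() only imitates a clAppLog()-style formatted call with vsnprintf() and is not the real macro.

```c
#include <assert.h>
#include <stdarg.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

#define MAX_STREAMS 8
#define NAME_LEN 32

/* Hypothetical registry standing in for the client's view of open streams. */
typedef struct {
    char open[MAX_STREAMS][NAME_LEN];
    int count;
} stream_registry;

/* After initialization, the configured default streams are usable at once. */
void registry_init(stream_registry *r)
{
    r->count = 0;
    strcpy(r->open[r->count++], "sysstream");
    strcpy(r->open[r->count++], "appstream");
}

static bool registry_has(const stream_registry *r, const char *name)
{
    for (int i = 0; i < r->count; i++)
        if (strcmp(r->open[i], name) == 0) return true;
    return false;
}

/* Mirrors the stream-open step: only then does a custom stream become usable. */
int registry_open(stream_registry *r, const char *name)
{
    if (registry_has(r, name)) return 0;
    if (r->count == MAX_STREAMS || strlen(name) >= NAME_LEN) return -1;
    strcpy(r->open[r->count++], name);
    return 0;
}

/* Formats an ASCII record into `out`; returns the vsnprintf() result,
 * or -1 if the stream has not been opened yet. */
int app_log(const stream_registry *r, const char *stream,
            char *out, size_t cap, const char *fmt, ...)
{
    assert(r != NULL && stream != NULL);
    if (!registry_has(r, stream)) return -1;
    va_list ap;
    va_start(ap, fmt);
    int n = vsnprintf(out, cap, fmt, ap);
    va_end(ap);
    return n;
}
```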

There are three types of consumers of Log Records: those who consume Log Records online through the Log Service, those who retrieve Log Records on demand through the Log Service, and those who consume the records by reading the Log File directly.

The first kind of consumer processes records online. These consumers are known as Log Stream Handlers, and they receive records in push mode: they wait continuously for records and process each one as soon as it arrives. Examples are the Log File Handler and the online viewer. A handler initializes the Log Service by invoking clLogInitialize() and then registers its interest in a particular Log Stream by invoking clLogHandlerRegister(). Handlers can discover the active streams either by listening for the stream creation event or by obtaining the list of active streams with clLogStreamListGet(). After registration, the handler receives records through the ClLogRecordDeliveryCallbackT callback that it registered during clLogInitialize(). The handler can acknowledge receipt of the records by invoking clLogHandlerRecordAck(). When the handler is no longer interested in receiving Log Records from a particular Log Stream, it can deregister itself by invoking clLogHandlerDeregister().
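Push-mode delivery with acknowledgement might be modelled like this. The struct layouts, the per-batch callback and the idea of acknowledging the highest processed sequence number are assumptions made for the sketch; they are not taken from ClLogRecordDeliveryCallbackT or clLogHandlerRecordAck().

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    unsigned long seq;    /* hypothetical service-assigned sequence number */
    const char *payload;  /* opaque bytes; the service does not interpret them */
} mock_record;

typedef struct mock_handler mock_handler;
typedef void (*delivery_cb)(mock_handler *h, const mock_record *recs, size_t n);

struct mock_handler {
    int registered;        /* 1 between register and deregister */
    delivery_cb deliver;   /* mirrors the callback registered at initialization */
    unsigned long acked;   /* last acknowledged sequence number */
    size_t processed;      /* records handled so far */
};

void handler_ack(mock_handler *h, unsigned long seq)
{
    if (seq > h->acked) h->acked = seq;
}

/* Example callback: process every record, then acknowledge the batch. */
void file_handler_cb(mock_handler *h, const mock_record *recs, size_t n)
{
    for (size_t i = 0; i < n; i++)
        h->processed++;  /* a real File Handler would persist recs[i] here */
    if (n > 0)
        handler_ack(h, recs[n - 1].seq);
}

/* Service side: push a batch only to a registered handler. */
void service_push(mock_handler *h, const mock_record *recs, size_t n)
{
    assert(h != NULL && h->deliver != NULL);
    if (h->registered)
        h->deliver(h, recs, n);
}
```

The handler never asks for data; the service decides when to call it, which is the defining trait of push mode.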

The second kind of consumer is offline. These consumers operate in pull mode, explicitly asking the Log Service for more Log Records when available. Another important difference from the first kind is that they work on a Log File instead of a Log Stream. Examples are the Log Reader and the Archiver. These consumers also initialize the Log Service by invoking clLogInitialize() and then open a Log File by invoking clLogFileOpen(). When they need more records, they invoke clLogFileRecordsGet(). They invoke clLogFileMetaDataGet() to obtain the metadata of the Log Streams persisted in the Log File. When a consumer is done with the file, it can close it by invoking clLogFileClose().
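By contrast, a pull-mode consumer keeps its own position and asks for records in batches. The sketch below assumes the file's records are already in memory as an array of strings; reader_records_get() is a hypothetical stand-in for the role clLogFileRecordsGet() plays, not its signature.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical pull-mode reader over records already persisted in a file. */
typedef struct {
    const char **records;  /* records as stored in the Log File */
    size_t total;          /* number of records in the file */
    size_t cursor;         /* index of the next record to return */
} mock_file_reader;

/* Copies up to `max` record pointers into `out` and returns how many were
 * copied; 0 means no more records are currently available. */
size_t reader_records_get(mock_file_reader *r, const char **out, size_t max)
{
    assert(r != NULL && (out != NULL || max == 0));
    size_t n = 0;
    while (n < max && r->cursor < r->total)
        out[n++] = r->records[r->cursor++];
    return n;
}
```

Repeated calls walk forward through the file; a return value of 0 tells the consumer to stop or to try again later.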

The third kind of consumer is also offline but may not be present in the cluster at all. These consumers operate directly on the Log File and are outside the scope of the Log Service. The offline viewer is one such consumer.

The Log Service provides an at-most-once guarantee, so Log Records may be lost. Some of the causes are highlighted here. Typically, Log Files have limited space, and if the archiver is not configured correctly, the Log File may fill up before the archiver can take out Log Records to create space for more. In this case, the Log Service continues by overwriting the oldest records, and those overwritten records are lost. Records are also lost if a node fails before it can flush recently generated Log Records to the Log File Handler for persistence. Similarly, if new Log Records are flushed more slowly than they are generated, the local memory of the Log Service Element may fill up; the Log Service then starts overwriting the oldest Log Records, which are lost.
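The overwrite-on-full behaviour that causes loss can be seen in a small ring buffer. This is a generic sketch, assuming a fixed capacity of four integer records; ring_write() overwrites the oldest record when the buffer is full and counts it as lost, which is the effect described above for both a full Log File and full local memory.

```c
#include <assert.h>
#include <stddef.h>

#define RING_CAP 4

/* Hypothetical fixed-size buffer that overwrites its oldest entry when full. */
typedef struct {
    int slots[RING_CAP];
    size_t head;   /* index of the oldest record */
    size_t count;  /* records currently held */
    size_t lost;   /* records overwritten before being flushed */
} ring_log;

void ring_write(ring_log *r, int record)
{
    if (r->count == RING_CAP) {
        /* Full: the oldest record is overwritten and therefore lost. */
        r->slots[r->head] = record;
        r->head = (r->head + 1) % RING_CAP;
        r->lost++;
    } else {
        r->slots[(r->head + r->count) % RING_CAP] = record;
        r->count++;
    }
}

/* Flushes (here: removes) the oldest record; returns 0 if the buffer is empty. */
int ring_flush_one(ring_log *r, int *out)
{
    assert(r != NULL && out != NULL);
    if (r->count == 0) return 0;
    *out = r->slots[r->head];
    r->head = (r->head + 1) % RING_CAP;
    r->count--;
    return 1;
}
```

Writing six records into the four-slot buffer before any flush leaves records 3 to 6 and reports two lost records.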

Each Log Stream is characterized by a set of attributes. These attributes are specified when the Stream is created and cannot be modified during its lifetime. They include the file name and location where the Stream is persisted, the size of each record in the Log Stream, the maximum number of records that can be stored in one physical file, the action to take when the file becomes full, whether the file has a backup copy, and the frequency at which the Log Records must be flushed. These attributes are specified through the ClLogStreamAttributesT structure.

Multiple Log Streams may be persisted in one Log File, and Local and Global streams can be mixed in the same Log File. In such a case, however, all stream attributes other than flushFreq and flushInterval must be the same.
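The sharing rule can be expressed as an attribute comparison. The struct below is a hypothetical stand-in for ClLogStreamAttributesT: fileName, fileUnitSize, maxFilesRotated, flushFreq and flushInterval come from this section, while the remaining field names, all field types, and the reading of flushFreq as a record count and flushInterval as a time interval are assumptions.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

typedef enum { FULL_ACTION_ROTATE, FULL_ACTION_WRAP, FULL_ACTION_HALT } full_action;

/* Hypothetical attribute set; not the real ClLogStreamAttributesT layout. */
typedef struct {
    char fileName[64];
    char fileLocation[128];     /* hypothetical name */
    unsigned recordSize;        /* hypothetical name: bytes per record */
    unsigned fileUnitSize;      /* size of one Log File Unit */
    full_action fileFullAction; /* hypothetical name */
    unsigned maxFilesRotated;   /* meaningful only for ROTATE */
    bool backupCopy;            /* hypothetical name: keep a backup copy? */
    unsigned flushFreq;         /* assumed: flush after this many records */
    unsigned flushInterval;     /* assumed: flush after this much time */
} stream_attrs;

/* Two streams may share one Log File only if every attribute other than
 * flushFreq and flushInterval matches. */
bool can_share_log_file(const stream_attrs *a, const stream_attrs *b)
{
    assert(a != NULL && b != NULL);
    return strcmp(a->fileName, b->fileName) == 0
        && strcmp(a->fileLocation, b->fileLocation) == 0
        && a->recordSize == b->recordSize
        && a->fileUnitSize == b->fileUnitSize
        && a->fileFullAction == b->fileFullAction
        && a->maxFilesRotated == b->maxFilesRotated
        && a->backupCopy == b->backupCopy;
}
```

Excluding only the two flush fields lets a Local and a Global stream flush on different schedules while still agreeing on the file layout.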

A Log File is a logical concept: it is a collection of physical files, each called a Log File Unit. If the action on a full Log File is CL_LOG_FILE_FULL_ACTION_HALT or CL_LOG_FILE_FULL_ACTION_WRAP, the Log File consists of only one Log File Unit, whereas if the action is CL_LOG_FILE_FULL_ACTION_ROTATE, the Log File contains multiple Log File Units. Every Log File Unit has the same size, specified as fileUnitSize in the Log Stream creation attributes. The maximum number of Log File Units in a Log File is governed by the maxFilesRotated attribute of the Log Stream. Log File Unit names follow the pattern fileName_<creationTime>, where fileName is a stream attribute and <creationTime> is the wall-clock time, in ISO 8601 format, at which the Log File Unit is created. One more file per Log File, named fileName_<creationTime>.cfg, stores the metadata of the Log Streams persisted in the Log File: the attributes of those streams and the streamId-to-streamName mapping.
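The naming scheme and the number of Log File Units can be sketched as follows. The sketch assumes the creation time is rendered in UTC as YYYY-MM-DDThh:mm:ss, one valid ISO 8601 form; the exact format, time zone and separators used by the Log Service may differ, and max_file_units() and unit_name() are hypothetical helpers.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <time.h>

typedef enum { FULL_ACTION_ROTATE, FULL_ACTION_WRAP, FULL_ACTION_HALT } full_action;

/* HALT and WRAP keep one Log File Unit; ROTATE keeps up to maxFilesRotated. */
unsigned max_file_units(full_action action, unsigned maxFilesRotated)
{
    return action == FULL_ACTION_ROTATE ? maxFilesRotated : 1u;
}

/* Writes "<fileName>_<creationTime><suffix>" into `out`, with the time in UTC
 * as YYYY-MM-DDThh:mm:ss. Pass "" for a data unit or ".cfg" for the metadata
 * file. Returns the snprintf() result, or -1 on failure. */
int unit_name(char *out, size_t cap, const char *fileName,
              time_t created, const char *suffix)
{
    char stamp[32];
    struct tm *utc;

    assert(out != NULL && fileName != NULL && suffix != NULL);
    if (strlen(fileName) == 0)
        return -1;
    utc = gmtime(&created);
    if (utc == NULL || strftime(stamp, sizeof stamp, "%Y-%m-%dT%H:%M:%S", utc) == 0)
        return -1;
    return snprintf(out, cap, "%s_%s%s", fileName, stamp, suffix);
}
```

For example, a unit of a stream whose fileName is app.log, created at the Unix epoch, would be named app.log_1970-01-01T00:00:00 under these assumptions, with app.log_1970-01-01T00:00:00.cfg as its metadata file.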