(→Deployment Machine Prerequisites) |

|||

| (98 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

== Preface == | == Preface == | ||

''PostgreSQL Database Integration'' guide provides the system requirements, running procedures for PostgreSQL SAFplus integration product. | ''PostgreSQL Database Integration'' guide provides the system requirements, running procedures for PostgreSQL SAFplus integration product. | ||

=== Audience === | === Audience === | ||

| − | ''PostgreSQL Database Integration'' is designed for system integrators, designers, and developers. To use the product, you must be aware of the fundamentals of design, development, | + | ''PostgreSQL Database Integration'' is designed for system integrators, designers, and developers. To use the product, you must be aware of the fundamentals of design, development, operations, management and configuration of SAFplus and PostgreSQL database. You also must be familiar with C programming, PostgreSQL database development and administration, UML notations, and have basic knowledge of Linux. |

| + | |||

=== Introduction === | === Introduction === | ||

| − | The | + | The ''PostgreSQL Database Integration'' feature lets a redundant Postgres database to be easily added to your cluster. The Postgres database servers will be running in Active/Standby mode. By using a “virtual” (or moving) IP addresses, the currently “active” database can be directly accessed by applications via a single well-known IP address. This allows your database applications to access the active Postgres database regardless of which machine it is being hosted on. |

| + | |||

| + | In addition your applications can make read-only queries to the standby postgres databases via well-known IP addresses. This allows read only PostgreSQL operations to be distributed across many database replicas. | ||

| + | |||

| + | This product is intended to be integrated into your SAFplus model. However a standalone model is provided for evaluation and testing purposes. | ||

| + | |||

| + | === PostgreSQL working model === | ||

| + | The following diagram shows the basic architecture of the evaluation model: | ||

| + | |||

| + | [[File:postgreSQL_replication_diagram.jpg]] | ||

| + | |||

| + | In this diagram, there are 4 “well-known” virtual IP addresses defined by the administrator: 192.168.128.130, 192.168.128.114, 192.168.128.115 and 192.168.128.116 for a system that contains 3 redundancy database servers: PyNodeI0, PyNodeI1 and PyNodeI2. In this case, 192.168.128.130 will move so that it is ALWAYS the IP address of the active (read/write) Postgres database server, and the others provide access to the standby servers. The database client application can access the database via these IP addresses. Specifically, if the application wants to write to the database, it uses the active IP. If it wants to read from the database, it uses either the active or the standby IP addresses. | ||

| + | |||

| + | When SAFplus running on all three nodes, there are three IP addresses assigned: 192.168.128.130 (active), 192.168.128.115 and 192.168.128.116 (standby replicas). IP 192.168.128.114 of the first machine is not assigned because its machine is active, so the active IP 192.168.128.130 is used instead (we do not assign both addresses to ensure that lower priority "read"-only applications do not consume CPU time on the active database). | ||

| + | |||

| + | In the failure case, a standby will take over to become a new active. At this time, its old IP address 192.168.128.115 is released and the IP 192.168.128.130 is assigned to new active. Clients lose their active database connections, but are able to reconnect promptly. | ||

| + | |||

| + | This architecture allows an unsophisticated database client to simply access the database to read and write via 192.168.128.130. Whenever the database connection is dropped, clients should attempt to reconnect in a loop. But if read access optimization is needed, a slightly more sophisticated client can attempt to connect to the “standby” addresses (114,115,116) first, and only connect to the active if no standbys exist. | ||

| + | |||

| + | When the first machine recovers or is replaced, it becomes a standby and the virtual IP 192.168.128.114 is assigned. | ||

| + | |||

| + | |||

| + | The following tutorial describes how to compile, deploy, configure and run this example model. | ||

| + | |||

| + | == PostgreSQL Database Integration Tutorial Model == | ||

| + | |||

| + | === Naming Conventions === | ||

| + | The SAFplus model has defined some images (tarballs) to be deployed on separate nodes in your cluster. These images are as follows: | ||

| + | * SCNodeI0, SCNodeI1. These are the system controllers (SC). By default, they are also are configured to run an Active/Standby Postgres server service group. You must run at least one SC in your cluster. | ||

| + | * PyNodeI0, PyNodeI1, PyNodeI2 demonstrate a 1+2 Postgres server service group, and (combined with the SC nodes) demonstrate running 2 independent Postgres databases simultaneously. Running these is optional. | ||

| + | * PyTutorialI0 is a node that runs an example SA-forum compliant SQL application written in C. Running these is optional. | ||

| + | * PyTEST nodes run a test application. Running these is optional. | ||

| + | |||

| + | <br/> | ||

| + | If you want to test a configuration not defined in this model, you may either load the model into the OpenClovis IDE and make the required modifications or import portions of this model into your own model. | ||

| + | <br/> | ||

| + | |||

| + | |||

| + | There are three users mentioned in this tutorial that must exist across all nodes in the cluster: | ||

| + | |||

| + | # '''root''' is the super user on a machine (node). SAFplus is generally run as "root" (although this is not required). | ||

| + | # '''postgres''' The PostgreSQL database can't be run as root so a normal Linux user is generally created for this purpose. | ||

| + | # '''postgres''' The PostgreSQL database ''itself'' contains users for access control to database tables. For simplicity, we use the same username for admin access inside the database as we use for the Linux user that controls the postgres database process. | ||

| + | |||

| + | {{note | Some simplifications have been used for the purposes of this tutorial. These simplifications are generally not limitations in the actual implementation. In this case, it is actually not required to have the same Linux and database usernames, or even to have the same usernames on each node.}} | ||

| + | |||

| + | == Building the Software == | ||

| + | |||

| + | This tutorial was built for machines running Ubuntu Linux. You may need to adjust various commands to match your distribution. | ||

| + | |||

| + | === Install Required Software === | ||

| + | * Install SAFplus SDK-6.0 (or higher) | ||

| + | * Download and install PostgreSQL-9.1 (or higher) on your development machine | ||

| + | <code><pre>tar xvfj postgresql-9.1.3.tar.bz2; cd postgresql*; ./configure; make; make install | ||

| + | </pre></code> | ||

| + | * Download and detar the OpenClovis PostgreSQL Database Integration model (this software). It should create a directory named SAFplus-DB in your system. | ||

| + | |||

| + | |||

| + | === Build the Model === | ||

| + | |||

| + | This model is built like any other SAFplus model. The following commands should work for typical SAFplus installations. Please see the SAFplus documentation if you have issues. | ||

| + | |||

| + | <code><pre> | ||

| + | cd SAFplus-DB | ||

| + | /opt/clovis/sdk-*/src/SAFplus/configure --with-model-name=postgreSQL | ||

| + | cd postgreSQL/build/local | ||

| + | make | ||

| + | make images | ||

| + | </pre></code> | ||

| + | |||

| + | At this point, the build will have created deployment images located at: | ||

| + | <base dir>/SAFplus-DB/target/postgreSQL/images/ | ||

| + | |||

| + | consisting of SCNodeI0.tgz, SCNodeI1.tgz, PyNodeI0.tgz, PyNodeI1.tgz, PyNodeI2.tgz, and TutorialI0.tgz. We will copy these image files to nodes in our test cluster, but first let us set up those nodes. | ||

| + | |||

| + | == Deployment Machine Prerequisites == | ||

| + | * Install PostgreSQL-9.1 (or higher but must match with PostgreSQL version used in development machine) on all machines in the cluster'''''(PyNodeI0, PyNodeI1, PyNodeI2), Tutorial nodes (PyTutorialI0, PyTutorialI1) and PyTEST (PyTESTI0)''''' | ||

| + | <code><pre>tar xvfj postgresql-9.1.3.tar.bz2; cd postgresql*; ./configure; make; make install | ||

| + | </pre></code> | ||

| + | * Install the "expect" tool on the Postgres server machines '''''(ScNodeI0, ScNodeI1, and PyNodeI0, PyNodeI1, PyNodeI2 if you are using them)''''' | ||

| + | <code><pre>sudo apt-get install expect | ||

| + | </pre></code> | ||

| + | * Ensure that the rsync tool is on the Postgres server machines '''''(ScNodeI0, ScNodeI1, and PyNodeI0, PyNodeI1, PyNodeI2 if you are using them)''''' and in the user execution's PATH | ||

| + | <code><pre> | ||

| + | $ which rsync || echo “rsync is NOT accessible” | ||

| + | /usr/bin/rsync | ||

| + | </pre></code> | ||

| + | |||

| + | === Create a database === | ||

| + | If there are Postgres databases available (you have already created them), there is nothing to do. But you must know the super user and password on that database or create a new user both to run the postgres software and to administer the database. | ||

| + | |||

| + | In this tutorial, we will use a fresh database. How to create a Postgres database is beyond the scope of this tutorial. However the following “recipe” can be used (creating the database exactly as per this recipe will mean less customization of the tutorial example application later since the example application needs to access this database). Due to postgres restrictions, this has to be done as the non-root user that will run the postgres server. In this tutorial we will use the “postgres” user. If you do not have a “postgres” user you can either use a different user (please remember it for later usage) or create one using the “adduser” Linux command. However, as a new user, it is recommended that you use the "postgres" username so you do not have to modify configuration files to match your username. | ||

| + | |||

| + | Please run these steps on every database server node: | ||

| + | |||

| + | * '''''Initialize the database storage''''' | ||

| + | <code><pre> | ||

| + | # adduser postgres | ||

| + | |||

| + | # su - postgres | ||

| + | |||

| + | $ mkdir -p /home/postgres/pgsql/data | ||

| + | |||

| + | $ chmod -R 0700 /home/postgres/pgsql/data | ||

| + | |||

| + | $ initdb /home/postgres/pgsql/data | ||

| + | </pre></code> | ||

| + | |||

| + | {{note | It is important to do these steps as the "postgres" user so the running postgreSQL database has permissions to access the files. Additionally, make sure that the "data" directory has permissions 0700 (user read/write ONLY), as the postgreSQL database will refuse to use a database with less restrictive permissions.}} | ||

| + | |||

| + | Next, ''as the 'postgres' user'' we will create a database named "pgdb": | ||

| + | |||

| + | <code><pre> | ||

| + | $ pg_ctl start -D /home/postgres/pgsql/data -m fast | ||

| + | $ createdb pgdb | ||

| + | </pre></code> | ||

| + | <br/> | ||

| + | <div style="background-color:#a0a0a0"> | ||

| + | '''Optional:''' | ||

| + | {| style="background-color:#c0c0c0" | ||

| + | | A default admin user has been added to the "pgdb" database that is the same username as your Linux username -- that is "postgres". So you do not need to add another user. But if you DID want separate Linux/Postgres usernames you would now create the user in the database: | ||

| + | | <pre> | ||

| + | $ psql pgdb | ||

| + | pgdb=# create user myusername with superuser password '123456'; | ||

| + | pgdb=# \q | ||

| + | </pre> | ||

| + | |} | ||

| + | </div> | ||

| + | <br/> | ||

| + | Finally, stop the postgres server, because we want to run it under SAFplus. | ||

| + | <code><pre> | ||

| + | pg_ctl -D /home/pguser/pgsql/data stop | ||

| + | </pre></code> | ||

| + | |||

| + | === Set up Trusted SSH === | ||

| + | |||

| + | To exchange the initial database, each database server machine has to be able to use "rsync" to copy the other's database. Please setup trusted ssh between the database machines so a password is not needed. In this tutorial example, we have 2 sets of database server machines SCNodeI0, SCNodeI1, and (optionally) PyNodeI0, PyNodeI1, and PyNodeI2 (remember these 2 groups of machines are running completely independent databases). We need to setup trusted ssh so that the Linux database user (in this case "postgres") on any database server machine in a group can ssh into any of the other machines in the same group without a password. For more information about how to accomplish this, please refer to the following article [[http://www.debian-administration.org/articles/152]].<br/><br/> But in this tutorial we can take one shortcut. Since all the database server nodes are interchangeable we can give them all the exact same private key. If your deployed system has dedicated, isolated, database server nodes, you can continue to use this trick (it actually more accurately models a failover as if it was just a server restart). However if your nodes also have other roles, you may want them to have different keys for security purposes. In this example, we have database server nodes with IP addresses .200, .201, and .202. | ||

| + | |||

| + | First, create the private key on .200: | ||

| + | <code><pre> | ||

| + | $ mkdir -p ~/.ssh | ||

| + | $ cd ~/.ssh | ||

| + | $ ssh-keygen -t rsa | ||

| + | Generating public/private rsa key pair. | ||

| + | Enter file in which to save the key (/home/postgres/.ssh/id_rsa): | ||

| + | Enter passphrase (empty for no passphrase): | ||

| + | Enter same passphrase again: | ||

| + | Your identification has been saved in /home/postgres/.ssh/id_rsa. | ||

| + | Your public key has been saved in /home/postgres/.ssh/id_rsa.pub. | ||

| + | The key fingerprint is: | ||

| + | <> | ||

| + | The key's randomart image is: | ||

| + | +--[ RSA 2048]----+ | ||

| + | | | | ||

| + | +-----------------+ | ||

| + | </pre></code> | ||

| + | |||

| + | Next, copy it to the other nodes in the group: | ||

| + | <code><pre> | ||

| + | $ scp id_rsa postgres@192.168.1.202:/home/postgres/.ssh/id_rsa | ||

| + | id_rsa 100% 1675 1.6KB/s 00:00 | ||

| + | $ scp id_rsa postgres@192.168.1.201:/home/postgres/.ssh/id_rsa | ||

| + | id_rsa 100% 1675 1.6KB/s 00:00 | ||

| + | </pre></code> | ||

| + | |||

| + | Next, copy the public portion to all the nodes (including itself): | ||

| + | <code><pre> | ||

| + | $ ssh-copy-id -i id_rsa.pub postgres@192.168.1.202 | ||

| + | $ ssh-copy-id -i id_rsa.pub postgres@192.168.1.201 | ||

| + | $ ssh-copy-id -i id_rsa.pub postgres@192.168.1.200 | ||

| + | </pre></code> | ||

| + | |||

| + | Finally test to make sure it worked by checking that no password is requested as you ssh to each node (you need to check all nodes in the group against all other nodes in the group): | ||

| + | |||

| + | From node .200: | ||

| + | <code><pre> | ||

| + | $ ssh postgres@192.168.1.201 | ||

| + | Linux ubuntu10043vm 2.6.32-38-generic #83-Ubuntu SMP Wed Jan 4 11:12:07 UTC 2012 x86_64 GNU/Linux | ||

| + | Ubuntu 10.04.3 LTS | ||

| + | |||

| + | Welcome to Ubuntu! | ||

| + | $ exit | ||

| + | $ ssh postgres@192.168.1.202 | ||

| + | Linux ubuntu10043vm 2.6.32-38-generic #83-Ubuntu SMP Wed Jan 4 11:12:07 UTC 2012 x86_64 GNU/Linux | ||

| + | Ubuntu 10.04.3 LTS | ||

| + | |||

| + | Welcome to Ubuntu! | ||

| + | </pre></code> | ||

| + | |||

| + | From node .201: | ||

| + | <code><pre> | ||

| + | $ ssh postgres@192.168.1.200 | ||

| + | $ ssh postgres@192.168.1.201 | ||

| + | </pre></code> | ||

| + | And so on... | ||

| + | |||

| + | |||

| + | === Copy the Model Images to your Cluster === | ||

| + | Please copy these to your test cluster. Note, you can access most of the example functionality by just deploying 3 images: SCNodeI0.tgz, SCNodeI1.tgz, and TutorialI0.tgz | ||

| + | Also, note that you can use your development machine, and virtual machines as nodes in the test cluster. | ||

| + | |||

| + | In this example, I have 3 nodes in my cluster: my development machine at 192.168.1.200 and 2 VMs at 192.168.1.201 and 192.168.1.202. | ||

| + | <code><pre> | ||

| + | root@ubuntu:~# mkdir db | ||

| + | root@ubuntu:~# cd db | ||

| + | root@ubuntu:~/db# tar xvfz /code/git/SAFplus-DB/target/postgreSQL/images/SCNodeI0.tgz | ||

| + | root@ubuntu:~/db# scp /code/git/SAFplus-DB/target/postgreSQL/images/SCNodeI1.tgz root@192.168.1.201:~ | ||

| + | root@192.168.1.201's password: | ||

| + | SCNodeI0.tgz 100% 41MB 40.7MB/s 00:01 | ||

| + | root@ubuntu:~/db# scp /code/git/SAFplus-DB/target/postgreSQL/images/TutorialI0.tgz root@192.168.1.202:~ | ||

| + | root@192.168.1.202's password: | ||

| + | SCNodeI1.tgz | ||

| + | </pre></code> | ||

| + | |||

| + | I then "sshed" into the VMs and detarred the images in a folder named "db" just like on my development machine. | ||

| + | |||

| + | == How to run the product == | ||

| + | |||

| + | ==== Step-by-step to run the model ==== | ||

| + | The model defined 5 working images: SCNodeI0, SCNodeI1 (the system controllers); PyNodeI0, PyNodeI1 & PyNodeI2 (payloads). You should have at least 3 nodes: 1 SCNodeIx, and 2 PyNodeIx to show most of the functionality of the product. | ||

| + | |||

| + | Users can add more system controllers ( in case of multi-system controllers support) and more database servers if needed. In this tutorial model, these images have to be deployed on separate machines but in your own model deploy these images in one machine. | ||

| + | |||

| + | First we need to prepare the machines '''on which the database (PyNodeX) will be deployed''': | ||

| + | |||

| + | # Choose your virtualIP, netmask and network interface devices which will be used in the application. They include 1 active virtualIP and 3 standby virtualIPs. These will need to be entered into the CSI at key ActiveServerIP (active virtualIP), ActiveServerNetmask (active netmask) and ActiveServerDev (active network interface device). The same for standbys: StandbyServerIP_PyNodeIx, StandbyServerNetmask_PyNodeIx, StandbyServerDev_PyNodeIx.<br/>''This tutorial uses IP addresses in the 192.168.56.xxx subnet. You may be able to use this subnet for your testing if it does not conflict with an existing subnet on your network.'' | ||

| + | # To exchange the initial database, each database server machine has to be able to use "rsync" to copy the other's database. Please setup trusted ssh between the database machines so a password is not needed. In this tutorial example, we have database server machines PyNodeI0, PyNodeI1, and PyNodeI2. We need to setup trusted ssh so that the database user (the username running the PostgreSQL database) on ANY of these machines can ssh into any of the other 2 database server machines without a password. For more information about how to accomplish this, please refer to the following article [[http://www.debian-administration.org/articles/152]].<br/><br/> But in this tutorial we can take one shortcut. Since all the database server nodes are interchangeable we can give them all the exact same private key. If your deployed system has dedicated, isolated, database server nodes, you can continue to use this trick. However if your nodes also have other roles, you may want them to have different keys for security purposes. In this example, we have database server nodes with IP addresses .200, .201, and .202 and the normal users on 3 machines are 'hung'. Note that if the 3 users on 3 three nodes are different, you can apply the method for each user on each node respectively. | ||

| + | |||

| + | First, create the private key on .200: | ||

| + | code><pre> | ||

| + | $ mkdir -p ~/.ssh | ||

| + | $ cd ~/.ssh | ||

| + | $ ssh-keygen -t rsa | ||

| + | Generating public/private rsa key pair. | ||

| + | Enter file in which to save the key (/home/pguser/.ssh/id_rsa): | ||

| + | Enter passphrase (empty for no passphrase): | ||

| + | Enter same passphrase again: | ||

| + | Your identification has been saved in /home/pguser/.ssh/id_rsa. | ||

| + | Your public key has been saved in /home/pguser/.ssh/id_rsa.pub. | ||

| + | The key fingerprint is: | ||

| + | <> | ||

| + | The key's randomart image is: | ||

| + | +--[ RSA 2048]----+ | ||

| + | | | | ||

| + | +-----------------+ | ||

| + | </pre></code> | ||

| + | |||

| + | Next, copy it to the other nodes: | ||

| + | <code><pre> | ||

| + | $ scp id_rsa pguser@192.168.1.202:/home/pguser/.ssh/id_rsa | ||

| + | id_rsa 100% 1675 1.6KB/s 00:00 | ||

| + | $ scp id_rsa pguser@192.168.1.201:/home/pguser/.ssh/id_rsa | ||

| + | id_rsa 100% 1675 1.6KB/s 00:00 | ||

| + | </pre></code> | ||

| + | |||

| + | Next, copy the public portion to all the nodes (including myself): | ||

| + | <code><pre> | ||

| + | $ ssh-copy-id -i id_rsa.pub pguser@192.168.1.202 | ||

| + | $ ssh-copy-id -i id_rsa.pub pguser@192.168.1.201 | ||

| + | $ ssh-copy-id -i id_rsa.pub pguser@192.168.1.200 | ||

| + | </pre></code> | ||

| + | |||

| + | Finally test to make sure it worked (you should check all 6 possibilities): | ||

| + | <code><pre> | ||

| + | $ ssh pguser@192.168.1.201 | ||

| + | Linux ubuntu10043vm 2.6.32-38-generic #83-Ubuntu SMP Wed Jan 4 11:12:07 UTC 2012 x86_64 GNU/Linux | ||

| + | Ubuntu 10.04.3 LTS | ||

| + | |||

| + | Welcome to Ubuntu! | ||

| + | |||

| + | $ ssh pguser@192.168.1.202 | ||

| + | Linux ubuntu10043vm 2.6.32-38-generic #83-Ubuntu SMP Wed Jan 4 11:12:07 UTC 2012 x86_64 GNU/Linux | ||

| + | Ubuntu 10.04.3 LTS | ||

| + | |||

| + | Welcome to Ubuntu! | ||

| + | |||

| + | $ ssh pguser@192.168.1.200 | ||

| + | ... | ||

| + | # ssh pguser@192.168.1.201 | ||

| + | (and so on) | ||

| + | </pre></code> | ||

| + | <ol start="3"> | ||

| + | <li>Run the ''configure'' script. This script is in place when you untar the tarball:<br/><code><pre>./configure --with-wal-log-dir=<full path to directory storing wal log> | ||

| + | --with-recovery-template-dir=<full path to directory storing recovery.conf template file> | ||

| + | --with-trigger-file-dir=<full path directory in which the trigger file will be created later on failover> | ||

| + | --with-postgres-data-dir=<full path to postgres database cluster directory> | ||

| + | --with-postgres-bin-dir=<full path to postgres database bin directory that contains postgres commands> | ||

| + | --with-database-name=<Name of database that we're working on in the postgres server> | ||

| + | --with-username=<normal user who controls the postgres database server> | ||

| + | --with-safplus-dir=<full path to the safplus directory that the safplus image is deployed on this machine> --help</pre></code> | ||

| + | <ul style="list-style: none;"> | ||

| + | <li>'''''--with-wal-log-dir''''': specify the full path to directory to store the wal log. If the directory doesn't exist, you have 2 options: y or n; if press y, the directory will be created (recommended); otherwise, there is no directory to store the wal log, this causes an error for postgreSQL</li> | ||

| + | <li>'''''--with-recovery-template-dir''''': specify full path to directory storing recovery.conf template file. If the directory doesn't exist, you have 2 options: y or n; if press y, the directory will be created (recommended); otherwise, there is no directory to store the recovery.conf template, this causes an error for standby postgreSQL server</li> | ||

| + | <li>'''''--with-trigger-file-dir''''': specify full path directory in which the trigger file will be created later on failover. If the directory doesn't exist, you have 2 options: y or n; if press y, the directory will be created (recommended); otherwise, there is no directory to store the trigger file later, this causes an error for postgreSQL</li> | ||

| + | <li>'''''--with-postgres-data-dir''''': specify full path to postgres database cluster directory you work on</li> | ||

| + | <li>'''''--with-postgres-bin-dir''''': specify full path to postgres database bin directory that contains postgres commands</li> | ||

| + | <li>'''''--with-database-name''''': Specify Name of database that we're working on in the postgres server</li> | ||

| + | <li>'''''--with-username''''': specify an normal user for whom you have set up the trusted ssh on this node controlling the postgreSQL such as: start DB, stop DB, replicate DB... Note that this username must be an available normal user in this node and must be different from root</li> | ||

| + | <li>'''''--with-safplus-dir''''': specify full path to the safplus directory where the safplus image is deployed on this machine</li> | ||

| + | <li>'''''--help''''': for help on this command</li></ul></ol> | ||

| + | <ins>'''Remarks:'''</ins> | ||

| + | # Parameters '''''--with-username''''', '''''--with-database-name''''' are mandatory, the others are optional and can be omitted. If they’re omitted, the script will use the default values: | ||

| + | #: If '''''--with-safplus-dir''''' is specified, wal log dir, trigger file dir and recovery template dir will be created in safplus directoy | ||

| + | #: If '''''--with-safplus-dir''''' is omitted, wal log dir, trigger file dir and recovery template dir will be created in the current directory | ||

| + | #: '''''--with-postgres-data-dir''''' has default value ''/usr/local/pgsql/data'' | ||

| + | #: '''''--with-postgres-bin-dir''''' has default value ''/usr/local/pgsql/bin'' | ||

| + | #: '''''--with-safplus-dir''''' has default value . (current directory) | ||

| + | # The script helps you to update safplus xml configuration files, it uses some additional default values not existed in the paramters list. They are: | ||

| + | #* postgresPort: use default port 5432 | ||

| + | #* postgresUsername: use “postgres” as the default username of postgreSQL. Note that “postgres” username must exist in your postgreSQL server on the machines | ||

| + | <ins>'''Typical use of the script:'''</ins> | ||

| + | By following below steps, you do not need to work with model using Openclovis IDE (skip the section [[#Work with model|Work with model]]). | ||

| + | # Go to postgreSQL model directory | ||

| + | # Build the model | ||

| + | # Make images | ||

| + | # Create SafplusPostgres directory to deploy the images on separate machines | ||

| + | # Copy images to SafplusPostgres directory at step 4 | ||

| + | # Change to root | ||

| + | # untar & unzip the images (the payloads) | ||

| + | # run ./configure --with-username=<an existing username on this machine> --with-database-name=<postgres database name> | ||

| + | |||

| + | For example: | ||

| + | ./configure --with-username=pguser --with-database-name=pgdb | ||

| + | |||

| + | <br/>Or you can add more parameters if you want. | ||

| + | |||

| + | # The script uses all default parameters mentioned above to configure the product. | ||

| + | # Open etc/clAmfDefinitions.xml to enter some values which the script hasn’t done for you, they are ActiveServerIP, ActiveServerNetmask, ActiveServerDev, StandbyServerIP_PyNodeI0, StandbyServerIP_PyNodeI1, StandbyServerIP_PyNodeI2, StandbyServerNetmask_PyNodeI0, StandbyServerNetmask_PyNodeI1, StandbyServerNetmask_PyNodeI2, StandbyServerDev_PyNodeI0, StandbyServerDev_PyNodeI1, StandbyServerDev_PyNodeI2. If the values of PostgresStandbyServerPort, StandbyServerPostgresDataDir, StandbyServerPostgresBinDir, PostgresUsername don’t match with your machines, please correct them. | ||

| + | # Open etc/asp.conf to change images configurations: node_addr, link_name... if necessary | ||

| + | The safplus can be started now. | ||

| + | |||

| + | ===== Work with model ===== | ||

| + | # Import the model into the OpenClovis IDE | ||

| + | # Open the postgresql component model | ||

| + | # Open PostgresqlCSI definition. Correct the values that has been setup at II.1.Preparation steps for each key. You have to input those values below (you need to do for PyNodeI0, PyNodeI1 & PyNodeI2): | ||

| + | #* ActiveServerIP: the active virtual IP. All write (or read also) SQLs must be through it | ||

| + | #* ActiveServerNetmask: subnet mask for the active server | ||

| + | #* ActiveServerDev: the ethernet device interface for the active server | ||

| + | #* StandbyServerIP_PyNodeI0: the virtual IP for standby server on which the first image (PyNodeI0) is deployed. All read only SQLs can be through it. Change PyNodeI0,PyNodeI1,PyNodeI2 | ||

| + | #* StandbyServerServerDev_PyNodeI0: the ethernet device interface for the first standby server | ||

| + | #* StandbyServerServerNetmask_PyNodeI0: the subnet mask for the first standby server | ||

| + | #* StandbyServerUsername_PyNodeI0: the user control the postgreSQL operations on first standby server. Notice that the username here must be the user specified at running configure script (see II.2.3) with option --with-username. For example, if --with-username=postgres, then the value for this key is postgres | ||

| + | #* PostgresStandbyServerPort_PyNodeI0: the port number the postgreSQL installed on the first standby server listens (default: 5432) | ||

| + | #* StandbyServerUsername_PyNodeI0: the username of the postgreSQL installed on the first standby server. This user should be a super user, it has at least read, write, replicate privilege on that database | ||

| + | #* StandbyServerPostgresDataDir_PyNodeI0: the full path to the postgreSQLdatabase cluster installed on the first standby server | ||

| + | #* StandbyServerPostgresBinDir_PyNodeI0: the full path to the postgreSQLexecutable files (commands) installed on the first standby server | ||

| + | #* StandbyServerPostgresTriggerFile_PyNodeI0: specify trigger file name (include full path and user-defined filename) on the first standby server. Notice that the path here must be the path specified at running configure script (see II.2.3) with option --with-trigger-file-dir. For example, if --with-trigger-file-dir=/home/postgres/trigger and user-defined trigger file name is pgsql.trigger.5432, then the value for this key is /home/postgres/trigger/pgsql.trigger.5432. This trigger file will be removed automatically after failover | ||

| + | #* PostgresRecoveryTemplateConfFile_PyNodeI0: specify recovery file name (include full path and recovery.conf) on the first standby server. Notice that the path here must be the path specified at running configure script (see II.2.3) with option --with-recovery-template-dir. For example, if --with-recovery-template-dir=/home/postgres/recoverytemplate, then the value for this key is /home/postgres/recoverytemplate/recovery.conf. | ||

| + | #* PostgresDatabaseName: the name of the postgreSQL database. Both active postgreSQL and the standbys use one | ||

| + | # After correcting all values, regenerate source by Generate Source command from menu Project: | ||

| + | #* Do backup source code | ||

| + | #* Do merge source code<br/>When generating source code compleles, copy all files from backup/app to src/app | ||

| + | # Add or remove images as your decision | ||

| + | # Build the model | ||

| + | # Make images. You can change the network interface for each image as your system | ||

| + | # Deploy system controllers (SCNodeI0 & SCNodeI1) and PyNodeIx on separate machines | ||

| + | # Start safplus under root for system controllers first then start PyNodeIx. The application must be run under root because only root can open port and set/clear the virtualIP address | ||

| + | |||

| + | === PostgreSQL tutorial model === | ||

| + | ==== Introduction ==== | ||

| + | This is the model which shows the user how to use the postgreSQL high availability. Refer to picture at the first page of this document for a good understand how the system works. | ||

| + | ==== Step by step to run the tutorial model ==== | ||

| + | # To make the tutorial work, the PostgreSQL working model (section A) have to be configured and work first | ||

| + | # Open the TutorialCSI. Enter some paramters as in the previous section | ||

| + | # Generate source code | ||

| + | # Edit the source code. All source code is put in clCompAppMain.c of Tutorial package. The current source code interacts with a sample database. Because we don’t know what table in your database is, so you have to edit the source code to be suitable for your database. What you have to do is in 2 functions: | ||

| + | #* cleanDb(): this function demonstrates a deletion of all records in a table. This deletion is only permitted on master (active) postgres server. You must change the table name in SQL DELETE statement:<br/>'''''res = PQexec(conn,"DELETE FROM weather");''''' | ||

| + | #* writeDb(): this function demonstrates an insertion of some records into a table. This is only permitted on master (active) postgres server. You must change the table name in SQL INSERT statement:<br/>'''''res = PQexecParams(conn,"INSERT INTO weather values($1,$2,$3)",<br/>as well as parameters: names, types... (refer to the sample code to see how to do that) | ||

| + | # Build and make images | ||

| + | # Deploy PyTutorialI0 and PyTutorialI1 on separate machines and start safplus on them. You’ll see the machine which contains the active CSI deletes records from a table and insert some new records to it. And the machine which contains the standby CSI continues reading records from the table on the standbys in turn | ||

| + | |||

| + | == Appendix == | ||

| + | ===== Changing the model’s configuration permanently ===== | ||

| + | Open src/config/clAmfDefinitions.xml and correct the values: | ||

| + | |||

| + | <csiType name="PostgresqlCSI"> | ||

| + | <rank>0</rank> | ||

| + | <nameValueLists> | ||

| + | <nameValue name="ActiveServerIP" value="192.168.56.120"/> | ||

| + | <nameValue name="ActiveServerNetmask" value="255.255.255.0"/> | ||

| + | <nameValue name="ActiveServerDev" value="eth0:1"/> | ||

| + | <nameValue name="StandbyServerIP_PyNodeI0" value="192.168.56.121"/> | ||

| + | <nameValue name="StandbyServerNetmask_PyNodeI0" value="255.255.255.0"/> | ||

| + | <nameValue name="StandbyServerDev_PyNodeI0" value="eth0:1"/> | ||

| + | <nameValue name="PostgresStandbyServerPort_PyNodeI0" value="5430"/> | ||

| + | <nameValue name="StandbyServerIP_PyNodeI1" value="192.168.56.122"/> | ||

| + | <nameValue name="StandbyServerNetmask_PyNodeI1" value="255.255.255.0"/> | ||

| + | <nameValue name="StandbyServerDev_PyNodeI1" value="eth0:1"/> | ||

| + | <nameValue name="PostgresStandbyServerPort_PyNodeI1" value="5432"/> | ||

| + | <nameValue name="StandbyServerIP_PyNodeI2" value="192.168.56.123"/> | ||

| + | <nameValue name="StandbyServerNetmask_PyNodeI2" value="255.255.255.0"/> | ||

| + | <nameValue name="StandbyServerDev_PyNodeI2" value="eth0:1"/> | ||

| + | <nameValue name="PostgresStandbyServerPort_PyNodeI2" value="5432"/> | ||

| + | <nameValue name="PostgresDatabaseName" value="mydb"/> | ||

| + | <nameValue name="StandbyServerUsername_PyNodeI0" value="pgres"/> | ||

| + | <nameValue name="StandbyServerUsername_PyNodeI1" value="tae"/> | ||

| + | <nameValue name="StandbyServerUsername_PyNodeI2" value="tae"/> | ||

| + | <nameValue name="StandbyServerPostgresDataDir_PyNodeI0" value="/usr/local/pgsql/data"/> | ||

| − | + | ===== Edit src/target.conf if you want to change the image configuration such as slot number, LINK (network interface)... ===== | |

| + | TRAP_IP=127.0.0.1 | ||

| + | INSTALL_PREREQUISITES=YES | ||

| + | INSTANTIATE_IMAGES=YES | ||

| + | CREATE_TARBALLS=YES | ||

| + | TIPC_NETID=1340 | ||

| + | SLOT_SCNodeI0=1 | ||

| + | SLOT_PyTutorialI0=6 | ||

| + | SLOT_PyTutorialI1=7 | ||

| + | SLOT_SCNodeI1=2 | ||

| + | SLOT_PyTESTI0=8 | ||

| + | SLOT_PyNodeI0=3 | ||

| + | SLOT_PyNodeI1=4 | ||

| + | SLOT_PyNodeI2=5 | ||

| + | LINK_SCNodeI0=eth1 | ||

| + | LINK_PyTutorialI0=eth1 | ||

| + | LINK_PyTutorialI1=eth1 | ||

| + | LINK_SCNodeI1=eth1 | ||

| + | LINK_PyTESTI0=eth1 | ||

| + | LINK_PyNodeI0=eth1 | ||

| + | LINK_PyNodeI1=eth1 | ||

| + | LINK_PyNodeI2=eth1 | ||

| + | ARCH=x86_64/linux-2.6.32 | ||

Latest revision as of 20:10, 6 September 2012

Contents |

[edit] Preface

PostgreSQL Database Integration guide provides the system requirements, running procedures for PostgreSQL SAFplus integration product.

[edit] Audience

PostgreSQL Database Integration is designed for system integrators, designers, and developers. To use the product, you must be aware of the fundamentals of design, development, operations, management and configuration of SAFplus and PostgreSQL database. You also must be familiar with C programming, PostgreSQL database development and administration, UML notations, and have basic knowledge of Linux.

[edit] Introduction

The PostgreSQL Database Integration feature lets a redundant Postgres database to be easily added to your cluster. The Postgres database servers will be running in Active/Standby mode. By using a “virtual” (or moving) IP addresses, the currently “active” database can be directly accessed by applications via a single well-known IP address. This allows your database applications to access the active Postgres database regardless of which machine it is being hosted on.

In addition your applications can make read-only queries to the standby postgres databases via well-known IP addresses. This allows read only PostgreSQL operations to be distributed across many database replicas.

This product is intended to be integrated into your SAFplus model. However a standalone model is provided for evaluation and testing purposes.

[edit] PostgreSQL working model

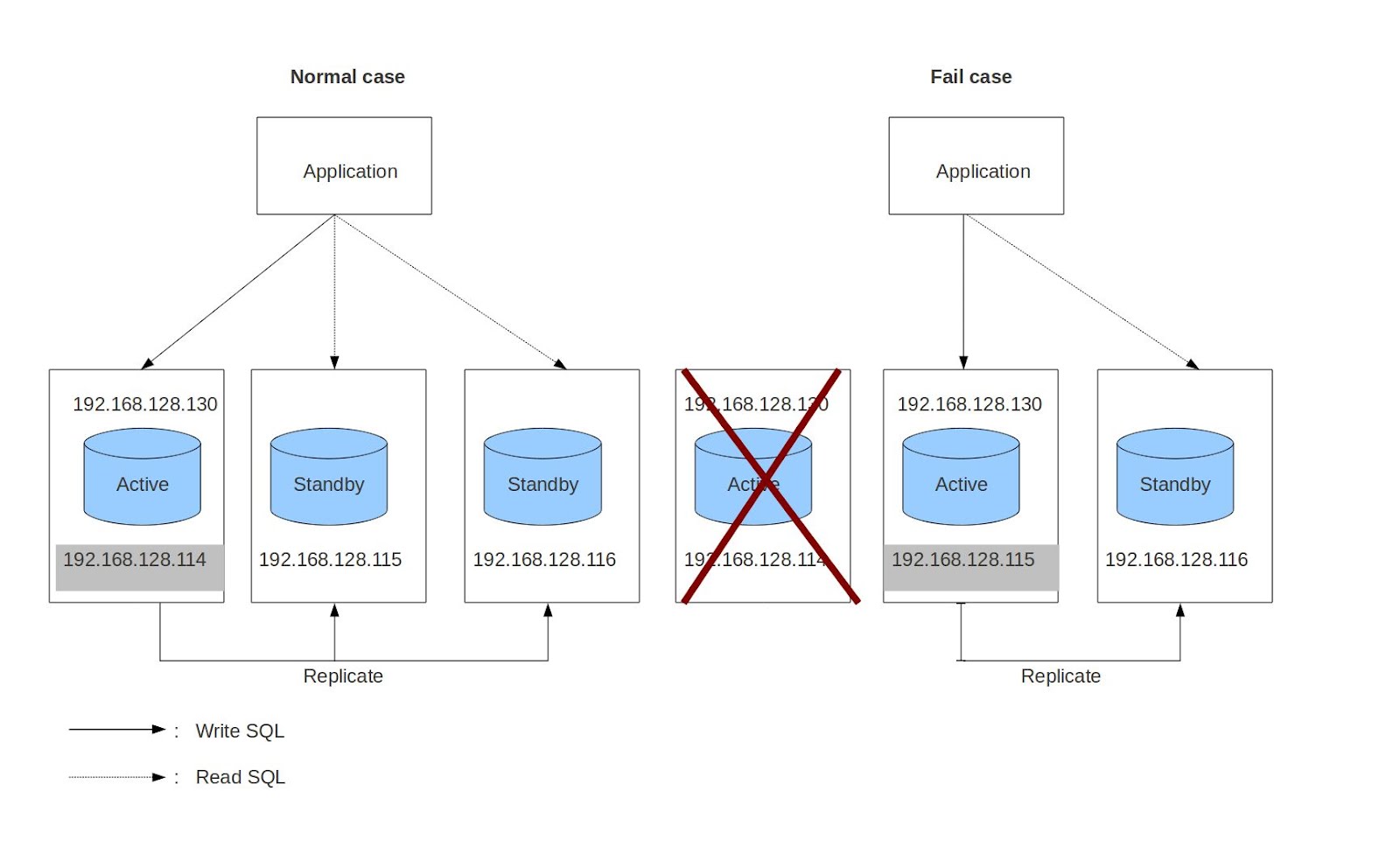

The following diagram shows the basic architecture of the evaluation model:

In this diagram, there are 4 “well-known” virtual IP addresses defined by the administrator: 192.168.128.130, 192.168.128.114, 192.168.128.115 and 192.168.128.116 for a system that contains 3 redundancy database servers: PyNodeI0, PyNodeI1 and PyNodeI2. In this case, 192.168.128.130 will move so that it is ALWAYS the IP address of the active (read/write) Postgres database server, and the others provide access to the standby servers. The database client application can access the database via these IP addresses. Specifically, if the application wants to write to the database, it uses the active IP. If it wants to read from the database, it uses either the active or the standby IP addresses.

When SAFplus running on all three nodes, there are three IP addresses assigned: 192.168.128.130 (active), 192.168.128.115 and 192.168.128.116 (standby replicas). IP 192.168.128.114 of the first machine is not assigned because its machine is active, so the active IP 192.168.128.130 is used instead (we do not assign both addresses to ensure that lower priority "read"-only applications do not consume CPU time on the active database).

In the failure case, a standby will take over to become a new active. At this time, its old IP address 192.168.128.115 is released and the IP 192.168.128.130 is assigned to new active. Clients lose their active database connections, but are able to reconnect promptly.

This architecture allows an unsophisticated database client to simply access the database to read and write via 192.168.128.130. Whenever the database connection is dropped, clients should attempt to reconnect in a loop. But if read access optimization is needed, a slightly more sophisticated client can attempt to connect to the “standby” addresses (114,115,116) first, and only connect to the active if no standbys exist.

When the first machine recovers or is replaced, it becomes a standby and the virtual IP 192.168.128.114 is assigned.

The following tutorial describes how to compile, deploy, configure and run this example model.

[edit] PostgreSQL Database Integration Tutorial Model

[edit] Naming Conventions

The SAFplus model has defined some images (tarballs) to be deployed on separate nodes in your cluster. These images are as follows:

- SCNodeI0, SCNodeI1. These are the system controllers (SC). By default, they are also are configured to run an Active/Standby Postgres server service group. You must run at least one SC in your cluster.

- PyNodeI0, PyNodeI1, PyNodeI2 demonstrate a 1+2 Postgres server service group, and (combined with the SC nodes) demonstrate running 2 independent Postgres databases simultaneously. Running these is optional.

- PyTutorialI0 is a node that runs an example SA-forum compliant SQL application written in C. Running these is optional.

- PyTEST nodes run a test application. Running these is optional.

If you want to test a configuration not defined in this model, you may either load the model into the OpenClovis IDE and make the required modifications or import portions of this model into your own model.

There are three users mentioned in this tutorial that must exist across all nodes in the cluster:

- root is the super user on a machine (node). SAFplus is generally run as "root" (although this is not required).

- postgres The PostgreSQL database can't be run as root so a normal Linux user is generally created for this purpose.

- postgres The PostgreSQL database itself contains users for access control to database tables. For simplicity, we use the same username for admin access inside the database as we use for the Linux user that controls the postgres database process.

|

Note: Some simplifications have been used for the purposes of this tutorial. These simplifications are generally not limitations in the actual implementation. In this case, it is actually not required to have the same Linux and database usernames, or even to have the same usernames on each node.

|

[edit] Building the Software

This tutorial was built for machines running Ubuntu Linux. You may need to adjust various commands to match your distribution.

[edit] Install Required Software

- Install SAFplus SDK-6.0 (or higher)

- Download and install PostgreSQL-9.1 (or higher) on your development machine

tar xvfj postgresql-9.1.3.tar.bz2; cd postgresql*; ./configure; make; make install

- Download and detar the OpenClovis PostgreSQL Database Integration model (this software). It should create a directory named SAFplus-DB in your system.

[edit] Build the Model

This model is built like any other SAFplus model. The following commands should work for typical SAFplus installations. Please see the SAFplus documentation if you have issues.

cd SAFplus-DB

/opt/clovis/sdk-*/src/SAFplus/configure --with-model-name=postgreSQL

cd postgreSQL/build/local

make

make images

At this point, the build will have created deployment images located at: <base dir>/SAFplus-DB/target/postgreSQL/images/

consisting of SCNodeI0.tgz, SCNodeI1.tgz, PyNodeI0.tgz, PyNodeI1.tgz, PyNodeI2.tgz, and TutorialI0.tgz. We will copy these image files to nodes in our test cluster, but first let us set up those nodes.

[edit] Deployment Machine Prerequisites

- Install PostgreSQL-9.1 (or higher but must match with PostgreSQL version used in development machine) on all machines in the cluster(PyNodeI0, PyNodeI1, PyNodeI2), Tutorial nodes (PyTutorialI0, PyTutorialI1) and PyTEST (PyTESTI0)

tar xvfj postgresql-9.1.3.tar.bz2; cd postgresql*; ./configure; make; make install

- Install the "expect" tool on the Postgres server machines (ScNodeI0, ScNodeI1, and PyNodeI0, PyNodeI1, PyNodeI2 if you are using them)

sudo apt-get install expect

- Ensure that the rsync tool is on the Postgres server machines (ScNodeI0, ScNodeI1, and PyNodeI0, PyNodeI1, PyNodeI2 if you are using them) and in the user execution's PATH

$ which rsync || echo “rsync is NOT accessible”

/usr/bin/rsync

[edit] Create a database

If there are Postgres databases available (you have already created them), there is nothing to do. But you must know the super user and password on that database or create a new user both to run the postgres software and to administer the database.

In this tutorial, we will use a fresh database. How to create a Postgres database is beyond the scope of this tutorial. However the following “recipe” can be used (creating the database exactly as per this recipe will mean less customization of the tutorial example application later since the example application needs to access this database). Due to postgres restrictions, this has to be done as the non-root user that will run the postgres server. In this tutorial we will use the “postgres” user. If you do not have a “postgres” user you can either use a different user (please remember it for later usage) or create one using the “adduser” Linux command. However, as a new user, it is recommended that you use the "postgres" username so you do not have to modify configuration files to match your username.

Please run these steps on every database server node:

- Initialize the database storage

# adduser postgres

# su - postgres

$ mkdir -p /home/postgres/pgsql/data

$ chmod -R 0700 /home/postgres/pgsql/data

$ initdb /home/postgres/pgsql/data

|

Note: It is important to do these steps as the "postgres" user so the running postgreSQL database has permissions to access the files. Additionally, make sure that the "data" directory has permissions 0700 (user read/write ONLY), as the postgreSQL database will refuse to use a database with less restrictive permissions.

|

Next, as the 'postgres' user we will create a database named "pgdb":

$ pg_ctl start -D /home/postgres/pgsql/data -m fast

$ createdb pgdb

Optional:

| A default admin user has been added to the "pgdb" database that is the same username as your Linux username -- that is "postgres". So you do not need to add another user. But if you DID want separate Linux/Postgres usernames you would now create the user in the database: | $ psql pgdb pgdb=# create user myusername with superuser password '123456'; pgdb=# \q |

Finally, stop the postgres server, because we want to run it under SAFplus.

pg_ctl -D /home/pguser/pgsql/data stop

[edit] Set up Trusted SSH

To exchange the initial database, each database server machine has to be able to use "rsync" to copy the other's database. Please setup trusted ssh between the database machines so a password is not needed. In this tutorial example, we have 2 sets of database server machines SCNodeI0, SCNodeI1, and (optionally) PyNodeI0, PyNodeI1, and PyNodeI2 (remember these 2 groups of machines are running completely independent databases). We need to setup trusted ssh so that the Linux database user (in this case "postgres") on any database server machine in a group can ssh into any of the other machines in the same group without a password. For more information about how to accomplish this, please refer to the following article [[1]].

But in this tutorial we can take one shortcut. Since all the database server nodes are interchangeable we can give them all the exact same private key. If your deployed system has dedicated, isolated, database server nodes, you can continue to use this trick (it actually more accurately models a failover as if it was just a server restart). However if your nodes also have other roles, you may want them to have different keys for security purposes. In this example, we have database server nodes with IP addresses .200, .201, and .202.

First, create the private key on .200:

$ mkdir -p ~/.ssh

$ cd ~/.ssh

$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/postgres/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/postgres/.ssh/id_rsa.

Your public key has been saved in /home/postgres/.ssh/id_rsa.pub.

The key fingerprint is:

<>

The key's randomart image is:

+--[ RSA 2048]----+

| |

+-----------------+

Next, copy it to the other nodes in the group:

$ scp id_rsa postgres@192.168.1.202:/home/postgres/.ssh/id_rsa

id_rsa 100% 1675 1.6KB/s 00:00

$ scp id_rsa postgres@192.168.1.201:/home/postgres/.ssh/id_rsa

id_rsa 100% 1675 1.6KB/s 00:00

Next, copy the public portion to all the nodes (including itself):

$ ssh-copy-id -i id_rsa.pub postgres@192.168.1.202

$ ssh-copy-id -i id_rsa.pub postgres@192.168.1.201

$ ssh-copy-id -i id_rsa.pub postgres@192.168.1.200

Finally test to make sure it worked by checking that no password is requested as you ssh to each node (you need to check all nodes in the group against all other nodes in the group):

From node .200:

$ ssh postgres@192.168.1.201

Linux ubuntu10043vm 2.6.32-38-generic #83-Ubuntu SMP Wed Jan 4 11:12:07 UTC 2012 x86_64 GNU/Linux

Ubuntu 10.04.3 LTS

Welcome to Ubuntu!

$ exit

$ ssh postgres@192.168.1.202

Linux ubuntu10043vm 2.6.32-38-generic #83-Ubuntu SMP Wed Jan 4 11:12:07 UTC 2012 x86_64 GNU/Linux

Ubuntu 10.04.3 LTS

Welcome to Ubuntu!

From node .201:

$ ssh postgres@192.168.1.200

$ ssh postgres@192.168.1.201

And so on...

[edit] Copy the Model Images to your Cluster

Please copy these to your test cluster. Note, you can access most of the example functionality by just deploying 3 images: SCNodeI0.tgz, SCNodeI1.tgz, and TutorialI0.tgz Also, note that you can use your development machine, and virtual machines as nodes in the test cluster.

In this example, I have 3 nodes in my cluster: my development machine at 192.168.1.200 and 2 VMs at 192.168.1.201 and 192.168.1.202.

root@ubuntu:~# mkdir db

root@ubuntu:~# cd db

root@ubuntu:~/db# tar xvfz /code/git/SAFplus-DB/target/postgreSQL/images/SCNodeI0.tgz

root@ubuntu:~/db# scp /code/git/SAFplus-DB/target/postgreSQL/images/SCNodeI1.tgz root@192.168.1.201:~

root@192.168.1.201's password:

SCNodeI0.tgz 100% 41MB 40.7MB/s 00:01

root@ubuntu:~/db# scp /code/git/SAFplus-DB/target/postgreSQL/images/TutorialI0.tgz root@192.168.1.202:~

root@192.168.1.202's password:

SCNodeI1.tgz

I then "sshed" into the VMs and detarred the images in a folder named "db" just like on my development machine.

[edit] How to run the product

[edit] Step-by-step to run the model

The model defined 5 working images: SCNodeI0, SCNodeI1 (the system controllers); PyNodeI0, PyNodeI1 & PyNodeI2 (payloads). You should have at least 3 nodes: 1 SCNodeIx, and 2 PyNodeIx to show most of the functionality of the product.

Users can add more system controllers ( in case of multi-system controllers support) and more database servers if needed. In this tutorial model, these images have to be deployed on separate machines but in your own model deploy these images in one machine.

First we need to prepare the machines on which the database (PyNodeX) will be deployed:

- Choose your virtualIP, netmask and network interface devices which will be used in the application. They include 1 active virtualIP and 3 standby virtualIPs. These will need to be entered into the CSI at key ActiveServerIP (active virtualIP), ActiveServerNetmask (active netmask) and ActiveServerDev (active network interface device). The same for standbys: StandbyServerIP_PyNodeIx, StandbyServerNetmask_PyNodeIx, StandbyServerDev_PyNodeIx.

This tutorial uses IP addresses in the 192.168.56.xxx subnet. You may be able to use this subnet for your testing if it does not conflict with an existing subnet on your network. - To exchange the initial database, each database server machine has to be able to use "rsync" to copy the other's database. Please setup trusted ssh between the database machines so a password is not needed. In this tutorial example, we have database server machines PyNodeI0, PyNodeI1, and PyNodeI2. We need to setup trusted ssh so that the database user (the username running the PostgreSQL database) on ANY of these machines can ssh into any of the other 2 database server machines without a password. For more information about how to accomplish this, please refer to the following article [[2]].

But in this tutorial we can take one shortcut. Since all the database server nodes are interchangeable we can give them all the exact same private key. If your deployed system has dedicated, isolated, database server nodes, you can continue to use this trick. However if your nodes also have other roles, you may want them to have different keys for security purposes. In this example, we have database server nodes with IP addresses .200, .201, and .202 and the normal users on 3 machines are 'hung'. Note that if the 3 users on 3 three nodes are different, you can apply the method for each user on each node respectively.

First, create the private key on .200:

code>$ mkdir -p ~/.ssh $ cd ~/.ssh $ ssh-keygen -t rsa Generating public/private rsa key pair. Enter file in which to save the key (/home/pguser/.ssh/id_rsa): Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /home/pguser/.ssh/id_rsa. Your public key has been saved in /home/pguser/.ssh/id_rsa.pub. The key fingerprint is: <> The key's randomart image is: +--[ RSA 2048]----+ | | +-----------------+</code>

Next, copy it to the other nodes:

$ scp id_rsa pguser@192.168.1.202:/home/pguser/.ssh/id_rsa

id_rsa 100% 1675 1.6KB/s 00:00

$ scp id_rsa pguser@192.168.1.201:/home/pguser/.ssh/id_rsa

id_rsa 100% 1675 1.6KB/s 00:00

Next, copy the public portion to all the nodes (including myself):

$ ssh-copy-id -i id_rsa.pub pguser@192.168.1.202

$ ssh-copy-id -i id_rsa.pub pguser@192.168.1.201

$ ssh-copy-id -i id_rsa.pub pguser@192.168.1.200

Finally test to make sure it worked (you should check all 6 possibilities):

$ ssh pguser@192.168.1.201

Linux ubuntu10043vm 2.6.32-38-generic #83-Ubuntu SMP Wed Jan 4 11:12:07 UTC 2012 x86_64 GNU/Linux

Ubuntu 10.04.3 LTS

Welcome to Ubuntu!

$ ssh pguser@192.168.1.202

Linux ubuntu10043vm 2.6.32-38-generic #83-Ubuntu SMP Wed Jan 4 11:12:07 UTC 2012 x86_64 GNU/Linux

Ubuntu 10.04.3 LTS

Welcome to Ubuntu!

$ ssh pguser@192.168.1.200

...

# ssh pguser@192.168.1.201

(and so on)

- Run the configure script. This script is in place when you untar the tarball:

./configure --with-wal-log-dir=<full path to directory storing wal log> --with-recovery-template-dir=<full path to directory storing recovery.conf template file> --with-trigger-file-dir=<full path directory in which the trigger file will be created later on failover> --with-postgres-data-dir=<full path to postgres database cluster directory> --with-postgres-bin-dir=<full path to postgres database bin directory that contains postgres commands> --with-database-name=<Name of database that we're working on in the postgres server> --with-username=<normal user who controls the postgres database server> --with-safplus-dir=<full path to the safplus directory that the safplus image is deployed on this machine> --help

- --with-wal-log-dir: specify the full path to directory to store the wal log. If the directory doesn't exist, you have 2 options: y or n; if press y, the directory will be created (recommended); otherwise, there is no directory to store the wal log, this causes an error for postgreSQL

- --with-recovery-template-dir: specify full path to directory storing recovery.conf template file. If the directory doesn't exist, you have 2 options: y or n; if press y, the directory will be created (recommended); otherwise, there is no directory to store the recovery.conf template, this causes an error for standby postgreSQL server

- --with-trigger-file-dir: specify full path directory in which the trigger file will be created later on failover. If the directory doesn't exist, you have 2 options: y or n; if press y, the directory will be created (recommended); otherwise, there is no directory to store the trigger file later, this causes an error for postgreSQL

- --with-postgres-data-dir: specify full path to postgres database cluster directory you work on

- --with-postgres-bin-dir: specify full path to postgres database bin directory that contains postgres commands

- --with-database-name: Specify Name of database that we're working on in the postgres server

- --with-username: specify an normal user for whom you have set up the trusted ssh on this node controlling the postgreSQL such as: start DB, stop DB, replicate DB... Note that this username must be an available normal user in this node and must be different from root

- --with-safplus-dir: specify full path to the safplus directory where the safplus image is deployed on this machine

- --help: for help on this command

Remarks:

- Parameters --with-username, --with-database-name are mandatory, the others are optional and can be omitted. If they’re omitted, the script will use the default values:

- If --with-safplus-dir is specified, wal log dir, trigger file dir and recovery template dir will be created in safplus directoy

- If --with-safplus-dir is omitted, wal log dir, trigger file dir and recovery template dir will be created in the current directory

- --with-postgres-data-dir has default value /usr/local/pgsql/data

- --with-postgres-bin-dir has default value /usr/local/pgsql/bin

- --with-safplus-dir has default value . (current directory)

- The script helps you to update safplus xml configuration files, it uses some additional default values not existed in the paramters list. They are:

- postgresPort: use default port 5432

- postgresUsername: use “postgres” as the default username of postgreSQL. Note that “postgres” username must exist in your postgreSQL server on the machines

Typical use of the script: By following below steps, you do not need to work with model using Openclovis IDE (skip the section Work with model).

- Go to postgreSQL model directory

- Build the model

- Make images

- Create SafplusPostgres directory to deploy the images on separate machines

- Copy images to SafplusPostgres directory at step 4

- Change to root

- untar & unzip the images (the payloads)

- run ./configure --with-username=<an existing username on this machine> --with-database-name=<postgres database name>

For example:

./configure --with-username=pguser --with-database-name=pgdb

Or you can add more parameters if you want.

- The script uses all default parameters mentioned above to configure the product.

- Open etc/clAmfDefinitions.xml to enter some values which the script hasn’t done for you, they are ActiveServerIP, ActiveServerNetmask, ActiveServerDev, StandbyServerIP_PyNodeI0, StandbyServerIP_PyNodeI1, StandbyServerIP_PyNodeI2, StandbyServerNetmask_PyNodeI0, StandbyServerNetmask_PyNodeI1, StandbyServerNetmask_PyNodeI2, StandbyServerDev_PyNodeI0, StandbyServerDev_PyNodeI1, StandbyServerDev_PyNodeI2. If the values of PostgresStandbyServerPort, StandbyServerPostgresDataDir, StandbyServerPostgresBinDir, PostgresUsername don’t match with your machines, please correct them.

- Open etc/asp.conf to change images configurations: node_addr, link_name... if necessary

The safplus can be started now.

[edit] Work with model

- Import the model into the OpenClovis IDE

- Open the postgresql component model

- Open PostgresqlCSI definition. Correct the values that has been setup at II.1.Preparation steps for each key. You have to input those values below (you need to do for PyNodeI0, PyNodeI1 & PyNodeI2):

- ActiveServerIP: the active virtual IP. All write (or read also) SQLs must be through it

- ActiveServerNetmask: subnet mask for the active server

- ActiveServerDev: the ethernet device interface for the active server

- StandbyServerIP_PyNodeI0: the virtual IP for standby server on which the first image (PyNodeI0) is deployed. All read only SQLs can be through it. Change PyNodeI0,PyNodeI1,PyNodeI2

- StandbyServerServerDev_PyNodeI0: the ethernet device interface for the first standby server

- StandbyServerServerNetmask_PyNodeI0: the subnet mask for the first standby server

- StandbyServerUsername_PyNodeI0: the user control the postgreSQL operations on first standby server. Notice that the username here must be the user specified at running configure script (see II.2.3) with option --with-username. For example, if --with-username=postgres, then the value for this key is postgres

- PostgresStandbyServerPort_PyNodeI0: the port number the postgreSQL installed on the first standby server listens (default: 5432)

- StandbyServerUsername_PyNodeI0: the username of the postgreSQL installed on the first standby server. This user should be a super user, it has at least read, write, replicate privilege on that database

- StandbyServerPostgresDataDir_PyNodeI0: the full path to the postgreSQLdatabase cluster installed on the first standby server

- StandbyServerPostgresBinDir_PyNodeI0: the full path to the postgreSQLexecutable files (commands) installed on the first standby server

- StandbyServerPostgresTriggerFile_PyNodeI0: specify trigger file name (include full path and user-defined filename) on the first standby server. Notice that the path here must be the path specified at running configure script (see II.2.3) with option --with-trigger-file-dir. For example, if --with-trigger-file-dir=/home/postgres/trigger and user-defined trigger file name is pgsql.trigger.5432, then the value for this key is /home/postgres/trigger/pgsql.trigger.5432. This trigger file will be removed automatically after failover

- PostgresRecoveryTemplateConfFile_PyNodeI0: specify recovery file name (include full path and recovery.conf) on the first standby server. Notice that the path here must be the path specified at running configure script (see II.2.3) with option --with-recovery-template-dir. For example, if --with-recovery-template-dir=/home/postgres/recoverytemplate, then the value for this key is /home/postgres/recoverytemplate/recovery.conf.

- PostgresDatabaseName: the name of the postgreSQL database. Both active postgreSQL and the standbys use one

- After correcting all values, regenerate source by Generate Source command from menu Project:

- Do backup source code

- Do merge source code

When generating source code compleles, copy all files from backup/app to src/app

- Add or remove images as your decision

- Build the model

- Make images. You can change the network interface for each image as your system

- Deploy system controllers (SCNodeI0 & SCNodeI1) and PyNodeIx on separate machines

- Start safplus under root for system controllers first then start PyNodeIx. The application must be run under root because only root can open port and set/clear the virtualIP address

[edit] PostgreSQL tutorial model

[edit] Introduction

This is the model which shows the user how to use the postgreSQL high availability. Refer to picture at the first page of this document for a good understand how the system works.

[edit] Step by step to run the tutorial model

- To make the tutorial work, the PostgreSQL working model (section A) have to be configured and work first

- Open the TutorialCSI. Enter some paramters as in the previous section

- Generate source code

- Edit the source code. All source code is put in clCompAppMain.c of Tutorial package. The current source code interacts with a sample database. Because we don’t know what table in your database is, so you have to edit the source code to be suitable for your database. What you have to do is in 2 functions:

- cleanDb(): this function demonstrates a deletion of all records in a table. This deletion is only permitted on master (active) postgres server. You must change the table name in SQL DELETE statement:

res = PQexec(conn,"DELETE FROM weather"); - writeDb(): this function demonstrates an insertion of some records into a table. This is only permitted on master (active) postgres server. You must change the table name in SQL INSERT statement:

res = PQexecParams(conn,"INSERT INTO weather values($1,$2,$3)",

as well as parameters: names, types... (refer to the sample code to see how to do that)

- cleanDb(): this function demonstrates a deletion of all records in a table. This deletion is only permitted on master (active) postgres server. You must change the table name in SQL DELETE statement:

- Build and make images

- Deploy PyTutorialI0 and PyTutorialI1 on separate machines and start safplus on them. You’ll see the machine which contains the active CSI deletes records from a table and insert some new records to it. And the machine which contains the standby CSI continues reading records from the table on the standbys in turn

[edit] Appendix

[edit] Changing the model’s configuration permanently

Open src/config/clAmfDefinitions.xml and correct the values:

<csiType name="PostgresqlCSI">

<rank>0</rank>

<nameValueLists>

<nameValue name="ActiveServerIP" value="192.168.56.120"/>

<nameValue name="ActiveServerNetmask" value="255.255.255.0"/>

<nameValue name="ActiveServerDev" value="eth0:1"/>

<nameValue name="StandbyServerIP_PyNodeI0" value="192.168.56.121"/>

<nameValue name="StandbyServerNetmask_PyNodeI0" value="255.255.255.0"/>

<nameValue name="StandbyServerDev_PyNodeI0" value="eth0:1"/>

<nameValue name="PostgresStandbyServerPort_PyNodeI0" value="5430"/>

<nameValue name="StandbyServerIP_PyNodeI1" value="192.168.56.122"/>

<nameValue name="StandbyServerNetmask_PyNodeI1" value="255.255.255.0"/>

<nameValue name="StandbyServerDev_PyNodeI1" value="eth0:1"/>

<nameValue name="PostgresStandbyServerPort_PyNodeI1" value="5432"/>

<nameValue name="StandbyServerIP_PyNodeI2" value="192.168.56.123"/>

<nameValue name="StandbyServerNetmask_PyNodeI2" value="255.255.255.0"/>

<nameValue name="StandbyServerDev_PyNodeI2" value="eth0:1"/>

<nameValue name="PostgresStandbyServerPort_PyNodeI2" value="5432"/>

<nameValue name="PostgresDatabaseName" value="mydb"/>

<nameValue name="StandbyServerUsername_PyNodeI0" value="pgres"/>

<nameValue name="StandbyServerUsername_PyNodeI1" value="tae"/>

<nameValue name="StandbyServerUsername_PyNodeI2" value="tae"/>

<nameValue name="StandbyServerPostgresDataDir_PyNodeI0" value="/usr/local/pgsql/data"/>

[edit] Edit src/target.conf if you want to change the image configuration such as slot number, LINK (network interface)...

TRAP_IP=127.0.0.1

INSTALL_PREREQUISITES=YES

INSTANTIATE_IMAGES=YES

CREATE_TARBALLS=YES

TIPC_NETID=1340

SLOT_SCNodeI0=1

SLOT_PyTutorialI0=6

SLOT_PyTutorialI1=7

SLOT_SCNodeI1=2

SLOT_PyTESTI0=8

SLOT_PyNodeI0=3

SLOT_PyNodeI1=4

SLOT_PyNodeI2=5

LINK_SCNodeI0=eth1

LINK_PyTutorialI0=eth1

LINK_PyTutorialI1=eth1

LINK_SCNodeI1=eth1

LINK_PyTESTI0=eth1

LINK_PyNodeI0=eth1

LINK_PyNodeI1=eth1

LINK_PyNodeI2=eth1

ARCH=x86_64/linux-2.6.32